VLSI Lab Wins ISLPED Design Contest Award

Professor Jie Gu and members of his Very Large-Scale Integration Lab team won the Design Contest Award at the premier ACM/IEEE International Symposium on Low Power Electronics and Design

Northwestern Engineering’s Jie Gu and members of his Very Large-Scale Integration (VLSI) Lab are developing artificial intelligence chips: specialized accelerator hardware designed to perform AI tasks 100 to 1,000 times more efficiently than existing processors.

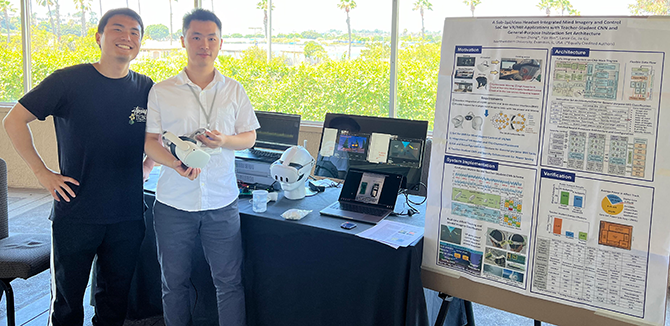

Gu, along with computer engineering PhD student Zhiwei Zhong, Yijie Wei (PhD ’23), and Lance Go (’24), won the Design Contest Award for their work in applied AI chip technology at the ACM/IEEE International Symposium on Low Power Electronics and Design (ISLPED), held August 5-7 in Newport Beach, California.

At the conference, Zhong and computer engineering PhD student Xi Chen presented a live demonstration of the VLSI team’s brain-machine interface, built with a novel AI-empowered neural processing system-on-a-chip (SoC). Integrated into the Meta Quest 2 virtual reality (VR) headset, the system uses the wearer’s brainwaves to control live scenes in a VR game or to make menu selections.

The work builds on a paper originally published at the 2024 IEEE International Solid-State Circuits Conference, titled “A Sub-1μJ/class Headset-Integrated Mind Imagery and Control SoC for VR/MR Applications with Teacher-Student CNN and General-Purpose Instruction Set Architecture.”

The system’s integrated AI operations support four tasks: mental imagery, in which users imagine pictures to issue control commands; real-time emotional state tracking and control during gaming or video watching; motor imagery, in which users imagine limb movement to control a computer; and steady-state visual evoked potential (SSVEP).

“For the first time, we integrated a ‘brain’ interface into a virtual reality headset so that users’ minds can be read by VR and used for controlling the VR scene and activities through a tiny integrated AI chip,” Zhong said. “In the human-machine interface, neural signals have not been well utilized for daily usage. This project allows such valuable information to be captured and processed, bolstering new capabilities for wearable devices.”