Teaching Robots to Help

Nick Morales used his final project in the MSR program to investigate how robots can predict human intent and help people move and position large, heavy objects.

Anyone who has ever used a wheelbarrow to haul something from one place to another knows how hard it is to move heavy materials without help.

But what if the wheelbarrow knew where you wanted to go and could actually help get those heavy materials where you wanted them with just a tiny push?

That concept was at the core of Nick Morales's (MSR ’23) final project for Northwestern Engineering's Master of Science in Robotics (MSR) program. Morales, who worked with professor Kevin Lynch and MSR co-director Matthew Elwin, investigated how to train a robot to assist in a task without it knowing exactly what its human counterpart wanted it to do.

“I was fascinated by how, using just a few human demonstrations, you could teach a robot to complete a task without explicitly specifying what the end goal was,” Morales said. “That's powerful, since specifying objectives in a way that takes into account the many scenarios that a robot can encounter is very difficult.”

Again, think of that wheelbarrow. For a robotic device to get your heavy materials from Point A to Point B across a yard filled with divots and other obstacles, it has to reliably predict what its human user intends to do.

Morales’s project demonstrated that machine learning can teach a robot to learn from training inputs such as force and direction, and then to assist by using its steel structure and powerful motors to do the heavy lifting.

For that to happen, Morales needed to understand diffusion policies, which are built on diffusion models: the same type of machine learning technique that powers AI image generators such as DALL-E, Midjourney, and Stable Diffusion.

Put simply, a diffusion policy learns from examples demonstrated by humans and then generates its own actions, so the robot can assist in situations it was never explicitly shown.

“I find autonomy, especially for complex tasks, a difficult but engaging problem,” Morales said. “[Diffusion] policy is at the forefront of using generative machine learning techniques to empower robotic autonomy, so I was eager to try it out.”

That teaching-learning process was the main challenge Morales had to address.

“One of the biggest takeaways is that, when doing machine learning research like this, the framework you set up to collect data, train, evaluate performance, and keep track of everything is as important as, if not more important than, the actual implementation and design of the model,” he said. “As with all machine learning, the amount, type, and quality of data is critical to the success of the model.”

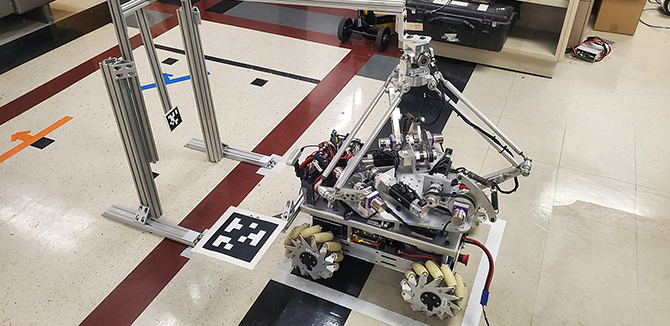

Morales’s work focused on a particular type of robot called omnidirectional mobile cobots, or omnids for short, which were developed at Northwestern in research led by Elwin and Lynch. The omnids are designed to collaboratively move delicate or flexible payloads at the direction of humans. But neither the omnids nor Morales’s project is just about movement.

In practical applications, properly trained omnids allow a small number of humans to assemble large structures, such as the blade of a wind turbine or perhaps even a solar panel on Mars. The robot can correctly interpret small movements from a human and help position a large, heavy object precisely.

As with many MSR projects, Morales benefited from collaboration with members of his cohort, specifically fellow student Hang Yin (MSR '23), who worked on the omnids with him and tried out an additional framework for generating robot actions. Morales said that collaboration helped him complete a lot of work in not a lot of time.

“We got way more work done together than either of us could have alone,” he said. “I'm excited to see the pipeline we've created continue to help researchers at Northwestern, and I can't wait to see where this research goes in the future.”