Examining Algorithmic Fairness and Accountability

During a workshop held at Northwestern University on November 18-19, IDEAL and the EnCORE Institute explored key socio-technical topics, including accountability and fairness in resource allocation, clustering and ranking, and prediction.

The accuracy and robustness of computational models are only one side of the equation.

The field of algorithmic fairness and accountability investigates the decision-making capabilities of data-driven systems through the lens of transparency, explainability, reliability, trustworthiness, and potential social impact.

On November 18-19, the Institute for Data, Econometrics, Algorithms, and Learning (IDEAL) and the Institute for Emerging CORE Methods for Data Science (EnCORE) cohosted a workshop at Northwestern exploring key socio-technical topics, including fairness in resource allocation, clustering and ranking, and prediction; accountability; and the application of these concepts across disciplines such as artificial intelligence, biology, and law.

Program

Convening researchers from computer science, economics, mathematics, electrical engineering, and statistics, the workshop featured talks and interactive discussions.

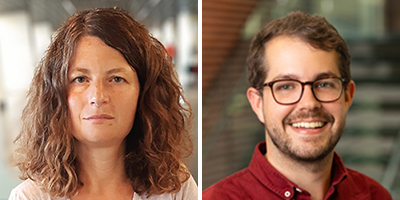

Northwestern Engineering’s Edith Elkind and Sean Sinclair outlined their approaches to balancing fairness and efficiency when allocating limited resources to maximize public benefit.

Elkind, Ginni Rometty Professor of Computer Science at Northwestern Engineering, discussed the fair and efficient design of transportation networks.

Sinclair, assistant professor of industrial engineering and management sciences at Northwestern Engineering, tackled an online fair allocation problem in which a decision-maker has a budget of scarce resources to allocate over a fixed number of rounds.

Using the practical example of a food bank distribution system, Sinclair and collaborators Siddhartha Banerjee (Cornell University) and Chamsi Hssaine (University of Southern California) constructed a sequence of perishable food allocations that is both equitable and efficient while accounting for demand uncertainty. Sinclair noted that this work could apply to other settings involving fairness and perishability, such as vaccine distribution, electric vehicle charging, and federated cloud computing.

The event was organized as part of the IDEAL Fall 2024 Special Program on Interpretability, Privacy, and Fairness by Samir Khuller (Northwestern Engineering); Rajni Dabas, visiting assistant professor of computer science at the McCormick School of Engineering; Sainyam Galhotra (Cornell University); Parikshit Gopalan (Apple); Aaron Roth (University of Pennsylvania); and Barna Saha (University of California San Diego).

Additional speakers at the workshop included:

- Arpita Biswas (Rutgers University) – “Fair Allocation of Conflicting Indivisible Resources”

- Bhaskar Ray Chowdhury (University of Illinois Urbana-Champaign) – “Fair and Stable Data Exchange Economies”

- Ian Kash (University of Illinois Chicago) – “Fair AI for Rich Decisions”

- Marina Knittel (University of California San Diego) – “Individually Fair Rank Aggregation and Pivot Algorithm”

- Aleksandra Korolova (Princeton University) – “Analyzing Meta's Implementation for Ensuring Fairness in Ad Delivery”

- Pooja R. Kulkarni (University of Illinois Chicago) – “Approximating Nash Social Welfare and Beyond Additive Valuations”

- Kamesh Munagala (Duke University) – “Majorized Bayesian Persuasion and Fair Selection”

- Ariel Procaccia (Harvard University) – “Generative Social Choice”

- Stavros Sintos (University of Illinois Chicago) – “Improved Approximation Algorithms for (Fair) Relational Clustering”

- Aravind Srinivasan (University of Maryland) – “Fairness, Randomization, and Approximation Algorithms”

- Ali Vakilian (Toyota Technological Institute at Chicago) – “Exploring Fairness in Clustering: Definitions, Techniques, and Applications”

- Shirley Zhang (Harvard University, former Northwestern Computer Science visiting student) – “Honor Among Bandits: No-Regret Learning for Online Fair Division”

IDEAL and EnCORE

IDEAL and EnCORE are multi-institution, transdisciplinary institutes supported by US National Science Foundation Harnessing the Data Revolution (HDR): Transdisciplinary Research in Principles of Data Science (TRIPODS) Phase II awards.

IDEAL partners include more than 75 investigators in computer science, economics, electrical engineering, law, mathematics, operations research, and statistics across Northwestern University, Google Research, the Illinois Institute of Technology, Loyola University Chicago, the Toyota Technological Institute at Chicago, the University of Chicago, and the University of Illinois Chicago.

Led by the University of California San Diego, the EnCORE team includes collaborators at the University of California, Los Angeles; the University of Pennsylvania; and the University of Texas at Austin.

EnCORE will host a second workshop on fairness and accountability in the spring.